High Availability

Latest revision as of 10:45, 14 September 2020

(For ProSBC, see ProSBC High Availability and 1+1.)

High availability refers to the design of a system as well as its ability to continue operating following one or more component faults. Concepts that are associated with high availability include:

- fault tolerance (the ability for a given component to recover from a fault)

- redundancy (the presence of one or more back-up instances of a hardware or software component)

- hot swapping (the ability to add, remove or change a system component without taking the overall system down and without compromising functionality)

- scalability (the ability of a system to grow over time as well as to respond to unexpected spikes in usage without negatively impacting performance)


TelcoBridges and High Availability

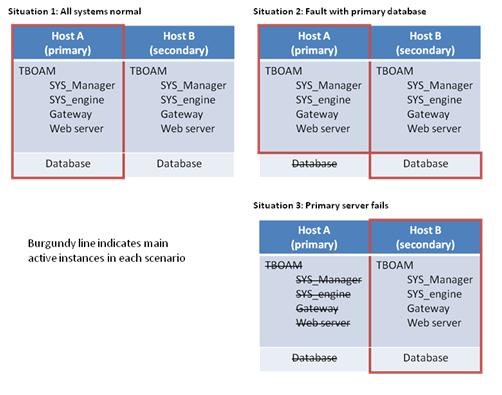

While TelcoBridges hardware can be purchased and configured to support high-availability (HA) requirements, this release of Toolpack also provides complete HA support in software, enabling end-to-end high availability. From Toolpack version 2.3 onwards, the existing application-level redundancy is complemented by redundancy of the core configuration database. The primary database can now go down while the master application instances on the main server keep running; following such a fault, they simply use the secondary (backup) database. If the master host machine itself fails, the primary configuration database and all master application instances go down with it. In this case, all standby application instances on the standby server, along with the secondary configuration database, become the new primary (master) instances. Finally, note that once HA support has been turned on, all applications become highly available by default.
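The failover rules in the paragraph above can be summarized as a small decision function. This is a hypothetical Python model, not Toolpack code: `SystemState` and `resolve_roles` are illustrative names, and it assumes the primary DB runs on the master host and the secondary DB on the standby host.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemState:
    primary_host_up: bool   # master host: master apps + primary DB (assumed)
    primary_db_up: bool     # primary configuration DB on the master host
    standby_host_up: bool   # standby host: standby apps + secondary DB (assumed)


def resolve_roles(state: SystemState) -> dict:
    """Return which host runs the active applications and which DB they use."""
    if state.primary_host_up:
        # Master host alive: master apps keep running and fall back to the
        # secondary DB only if the primary DB alone has failed.
        if state.primary_db_up:
            return {"active_apps": "master", "config_db": "primary"}
        if state.standby_host_up:
            return {"active_apps": "master", "config_db": "secondary"}
        return {"active_apps": "master", "config_db": None}  # double fault
    if state.standby_host_up:
        # Master host lost: the primary DB went down with it, so the standby
        # apps and the secondary DB are promoted to master.
        return {"active_apps": "standby", "config_db": "secondary"}
    return {"active_apps": None, "config_db": None}
```

The last two `None` cases correspond to the double-fault limitation listed under Known limitations.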

HA at various layers of the system

A Toolpack system provides several types of redundancy, summarized below.

IP Network (GW Control and Management) (1+1)

All communication between applications, between applications and TMedia units, and between TMedia units uses redundant IP interfaces. This redundancy is built into all of our code from the ground up.
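The general idea of sending over redundant IP interfaces can be sketched in Python as follows. This is an illustrative pattern only, not the Toolpack implementation. Each "transport" is a hypothetical callable bound to one IP interface that raises `OSError` when its path is down.

```python
from typing import Callable, Sequence

# A transport sends bytes over one IP interface and raises OSError on failure.
Transport = Callable[[bytes], None]


def send_redundant(message: bytes, transports: Sequence[Transport]) -> int:
    """Send over the first interface that works; return its index."""
    errors = []
    for index, send in enumerate(transports):
        try:
            send(message)
            return index
        except OSError as exc:
            errors.append(exc)  # this path is down, try the next interface
    raise ConnectionError(f"all {len(transports)} interfaces failed: {errors}")
```

Trying the interfaces in a fixed order keeps the sketch simple; a real system would also track interface health so it does not retry a known-dead path on every message.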

IP Network (SIP Signaling) (1+1)

For outgoing calls, the system can be configured with alternate addresses for reaching the SIP proxy, so that if one network path is down, the other one is used. For incoming calls, you can configure SIP stacks on two separate IP interfaces so that if one is unreachable by a peer, the peer can still reach the second. TMedia and FreeSBC also support virtual ports/VLANs, each with its own IP interface and address; many virtual ports/VLANs can be created on one physical port (or, on TMedia, on two physical ports in a bonding configuration). Therefore, for both outgoing and incoming SIP calls, different IP paths can provide redundancy over a single physical interface, or over two bonded physical interfaces, in which case TMedia additionally protects against the loss of one physical port. If all VoIP ports on one unit are down, the whole unit must be detected as down before failover to the other unit takes place; the other unit then takes over the same IP interfaces and addresses and resumes call traffic.
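The unit-level failover rule above (losing one bonded port is absorbed inside the unit, while losing every VoIP port moves the IP addresses to the other unit) can be modelled roughly as below. This is a hypothetical sketch: `Unit` and `fail_over` are illustrative names, and it treats "all ports down" as equivalent to the unit being detected as down, glossing over the detection delay.

```python
from dataclasses import dataclass, field


@dataclass
class Unit:
    name: str
    voip_ports_up: list[bool]                    # one entry per physical VoIP port
    ip_addresses: set[str] = field(default_factory=set)

    def is_down(self) -> bool:
        # A single failed port is handled by bonding inside the unit; the
        # unit as a whole counts as down only when every VoIP port is down.
        return not any(self.voip_ports_up)


def fail_over(active: Unit, standby: Unit) -> Unit:
    """Move the shared IP interfaces to the standby unit if the active one is down."""
    if not active.is_down():
        return active
    standby.ip_addresses |= active.ip_addresses  # same addresses, new unit
    active.ip_addresses = set()
    return standby
```

Because the standby unit takes over the same addresses, SIP peers do not need to be reconfigured after the switchover.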

IP Network (Voice Path) (1+1)

We support redundant voice paths for SIP calls. If one path is down, the other is selected for all new calls. If both paths are up, they can be used for load sharing. Note, however, that if a path is lost, active calls using that path are not automatically switched to the other path (this would require sending a SIP re-INVITE, because the IP address of the second interface differs from that of the first). TMedia also offers IP bonding, in which a virtual IP address is created over two physical interfaces; this protects against the failure of either interface while keeping the same IP address. If all VoIP ports on a unit are down, call traffic resumes through the same unit-level failover mechanism described under IP Network (SIP Signaling) (1+1) above.
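The path-selection behaviour described above can be sketched in Python. This is a hypothetical model, not Toolpack's selection logic: `VoicePathSelector` is an illustrative name, and round-robin is an assumed load-sharing policy. The key point it shows is that a lost path only affects new calls; calls already pinned to it are not moved.

```python
import itertools


class VoicePathSelector:
    """Pick a media path for each new call; existing calls keep their path."""

    def __init__(self, paths):
        self.status = {path: True for path in paths}  # path -> is up
        self.calls = {}                               # call id -> path
        self._rr = itertools.count()                  # round-robin counter

    def set_status(self, path, up):
        # Active calls on a lost path are NOT moved (that would need a re-INVITE).
        self.status[path] = up

    def new_call(self, call_id):
        up_paths = [p for p, ok in self.status.items() if ok]
        if not up_paths:
            raise RuntimeError("no voice path available")
        # Load sharing: rotate over whichever paths are currently up.
        path = up_paths[next(self._rr) % len(up_paths)]
        self.calls[call_id] = path
        return path
```

For example, with paths `"eth0"` and `"eth1"` both up, consecutive calls alternate between them; once `"eth0"` is marked down, every new call goes to `"eth1"` while earlier calls still record `"eth0"`.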

SS7 Redundancy (N+1)

- MTP2: redundancy is achieved by spreading the links that reach a destination across at least two TMedia units, so that if one TMedia unit fails, you can still reach the destination through the link(s) on the other unit.

- MTP3: full load sharing. MTP3 processing is distributed across all TMedia units in the system.

- ISUP, SCCP, and TCAP: an Active-Standby scheme is available.

Bottom line: no calls are lost when any TMedia unit crashes; the standby stack takes control and keeps the active calls open.
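To illustrate why spreading links across TMedia units keeps a destination reachable, here is a simplified Python sketch of SLS-based link selection. MTP3 load sharing uses the signalling link selection (SLS) field of the routing label; the plain modulo mapping below is a simplification for illustration, not the actual Toolpack distribution algorithm, and `select_link` is a hypothetical name.

```python
def select_link(sls: int, links: list[tuple[str, str, bool]]) -> tuple[str, str]:
    """Pick a signalling link for a message from its SLS value.

    links: (tmedia_unit, link_name, available) for every link in the link set.
    Messages with the same SLS map to the same available link, which keeps
    message order within a signalling relation.
    """
    available = [(unit, name) for unit, name, ok in links if ok]
    if not available:
        raise RuntimeError("destination unreachable: no available link")
    return available[sls % len(available)]
```

If every link on one TMedia unit becomes unavailable, all SLS values simply map onto the links of the surviving unit.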

ISDN, CAS

ISDN and CAS signaling are tied directly to the physical trunk; HA is not supported at the trunk level.

Automatic protection mechanism on the TMedia

- On-board process monitoring: deadlocked or crashed processes are automatically detected, and the unit is rebooted to minimize downtime.

- Hardware watchdog: when a Toolpack system is active, the System Manager continuously polls the TMedia unit. If this polling stops, the TMedia unit has lost contact with the Toolpack applications, and it reboots automatically if communication is not restored in a timely manner.
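The watchdog behaviour can be modelled as a simple timeout on the last poll. This Python sketch is hypothetical: the class name and the 10-second timeout used in the example are assumptions, since the actual TMedia timeout is not specified here. Time is passed in explicitly for testability; a real implementation would read a monotonic clock such as `time.monotonic()`.

```python
class Watchdog:
    """Hypothetical model of the polling watchdog described above."""

    def __init__(self, timeout_s: float, now: float):
        self.timeout_s = timeout_s
        self.last_poll = now

    def on_poll(self, now: float) -> None:
        # Called each time the System Manager polls the unit.
        self.last_poll = now

    def should_reboot(self, now: float) -> bool:
        # Reboot only if polling has been silent for longer than the timeout.
        return now - self.last_poll > self.timeout_s
```

For example, `Watchdog(timeout_s=10.0, now=0.0)` polled at `t=5.0` does not request a reboot at `t=14.0`, but does at `t=15.5`.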

Application Level (1+1)

All Toolpack applications support Active-Standby mode, so you can have two hosts, each running an instance of the Toolpack applications. If the active server crashes, the applications on the standby server take over. In addition, Toolpack installs a service on each host that monitors all Toolpack applications and automatically restarts crashed or deadlocked applications.
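The restart-on-crash part of the monitoring service can be illustrated with a minimal Python supervisor loop. This is a sketch of the general technique, not the Toolpack service: `supervise` is a hypothetical name, the restart limit is an assumption, and deadlock detection (which would need a heartbeat, similar to the watchdog above) is not covered.

```python
import subprocess
import sys


def supervise(cmd: list[str], max_restarts: int) -> int:
    """Run cmd, restarting it whenever it exits abnormally.

    Returns the number of restarts performed. Stops when the process exits
    cleanly (code 0) or when max_restarts is reached.
    """
    restarts = 0
    while True:
        code = subprocess.run(cmd).returncode
        if code == 0:
            return restarts      # normal exit: nothing to restart
        if restarts >= max_restarts:
            return restarts      # give up; a real system would escalate
        restarts += 1            # crashed: start it again


if __name__ == "__main__":
    # Example: a child that always crashes is restarted twice, then abandoned.
    print(supervise([sys.executable, "-c", "raise SystemExit(1)"], max_restarts=2))
```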

Configuration Database HA (1+1)

We also support HA at the database (DB) level. Toolpack can configure two DB servers and set up replication between them so that all changes made to the main DB are replicated to the backup DB. If the main DB is lost, Toolpack automatically switches to the backup DB.
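The replicate-then-switch behaviour can be shown with a toy in-memory model. This is not how Toolpack or MySQL implement it: `ReplicatedConfigDB` is a hypothetical name, and replication here is synchronous for simplicity, whereas MySQL replication is asynchronous by default, so a real backup may lag slightly behind the main DB.

```python
class ReplicatedConfigDB:
    """Toy model: writes go to the main DB and are replicated to the backup."""

    def __init__(self):
        self.main: dict[str, str] = {}
        self.backup: dict[str, str] = {}
        self.main_up = True

    def _active(self) -> dict[str, str]:
        # Automatic switch: use the backup as soon as the main DB is lost.
        return self.main if self.main_up else self.backup

    def write(self, key: str, value: str) -> None:
        self._active()[key] = value
        if self.main_up:
            self.backup[key] = value  # replication (synchronous in this toy)

    def read(self, key: str) -> str:
        return self._active()[key]
```

Because every change made while the main DB was up already exists on the backup, applications can keep reading and writing configuration after the switch.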

Prerequisites

When using two external hosts, the first prerequisite for enabling HA database functionality is a secondary server running the MySQL database software. To configure that secondary server, follow the same prerequisites as for a normal installation of Toolpack on that machine. Note that the IP addresses of both the primary and the secondary servers must now be set explicitly; using ‘localhost’ or ‘127.0.0.1’ is no longer permitted. You will also need to enable database replication, following MySQL’s own procedures for doing so.

Figure 2: Server scenarios
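A pre-installation check for the explicit-address requirement could look like the Python sketch below. The function names are hypothetical, and the check that primary and secondary differ is an added assumption (two separate servers). Note that `is_loopback` rejects the whole 127.0.0.0/8 range and `::1`, which is slightly broader than the ‘127.0.0.1’ rule stated above.

```python
import ipaddress


def check_db_host(host: str) -> None:
    """Reject loopback names/addresses, which HA mode no longer permits."""
    if host.lower() == "localhost":
        raise ValueError("use an explicit IP address, not 'localhost'")
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        raise ValueError(f"{host!r} is not an explicit IP address") from None
    if addr.is_loopback:
        raise ValueError(f"{host} is a loopback address")


def check_ha_hosts(primary: str, secondary: str) -> None:
    for host in (primary, secondary):
        check_db_host(host)
    # Assumption: the two DB servers must be distinct machines.
    if ipaddress.ip_address(primary) == ipaddress.ip_address(secondary):
        raise ValueError("primary and secondary must be different servers")
```

For example, `check_ha_hosts("192.168.1.10", "192.168.1.11")` passes, while `"localhost"` or `"127.0.0.1"` for either server raises `ValueError`.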

Testing this feature

While we expect enhanced HA functionality to have a major positive impact on your operations, HA is like an insurance policy: you hope you never have to use it, and it is not something you necessarily want to test on production servers. Consequently, this is an area where we expect to invest significant testing effort. We have identified the following items as worth testing, and we recommend that they be part of your testing efforts as well.

You should expect different effects on the system depending on the test operation performed. These fall into two broad categories. In the first, the system will NOT drop any calls and will still accept new incoming calls as well as calls that are currently being processed (transient calls). In the second, established calls will NOT be dropped, but the system will not be able to accept transient calls. Please note that this interruption is temporary, lasting only as long as the switchover; once the switchover is complete, incoming calls are accepted again.

MySQL operations (no effect on established calls, still able to accept transient calls)

- Shut down the MySQL service on the master

- Kill the MySQL service on the master

- Shut down the MySQL service on the slave

- Kill the MySQL service on the slave

Toolpack operations

- Gracefully quit the master Toolpack service (no effect on established calls, will drop transient calls)

- Gracefully quit the slave Toolpack service (no effect on established calls, still able to accept transient calls)

Toolpack applications operations

- Master

  - tboamapp

  - toolpack_sys_manager (no effect on established calls, still able to accept transient calls)

  - toolpack_engine (no effect on established calls, will drop transient calls)

  - gateway (no effect on established calls, will drop transient calls)

- Slave

  - tboamapp

  - toolpack_sys_manager (no effect on established calls, still able to accept transient calls)

  - toolpack_engine (no effect on established calls, still able to accept transient calls)

  - gateway (no effect on established calls, still able to accept transient calls)

Host operations

- Master (no effect on established calls, will drop transient calls)

  - Disconnect the network(s)

  - Shut down the server (or disconnect power)

- Slave (no effect on established calls, still able to accept transient calls)

  - Disconnect the network(s)

  - Shut down the server
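When automating these tests, the expected effects listed above can be encoded as a lookup table and compared with what was observed. This Python sketch is a hypothetical test-harness helper: the operation keys are illustrative labels, and `tboamapp` is omitted because no expected effect is documented for it.

```python
# Expected effect of each test operation, taken from the lists above.
# "keep" = still accepts transient calls; "drop" = transient calls dropped.
# Established calls are never expected to be dropped.
EXPECTED = {
    ("mysql", "master"): "keep",
    ("mysql", "slave"): "keep",
    ("toolpack_service", "master"): "drop",
    ("toolpack_service", "slave"): "keep",
    ("toolpack_sys_manager", "master"): "keep",
    ("toolpack_sys_manager", "slave"): "keep",
    ("toolpack_engine", "master"): "drop",
    ("toolpack_engine", "slave"): "keep",
    ("gateway", "master"): "drop",
    ("gateway", "slave"): "keep",
    ("host", "master"): "drop",
    ("host", "slave"): "keep",
}


def check_result(operation, role, established_dropped, transient_dropped):
    """Return a list of deviations from the documented expectations."""
    problems = []
    if established_dropped:
        problems.append("established calls were dropped")
    if EXPECTED[(operation, role)] == "keep" and transient_dropped:
        problems.append("transient calls were dropped but should not be")
    return problems
```

An empty list means the observed behaviour matches the documented expectation for that operation.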

Following a switchover, you will want to test the following items and verify that there are no errors:

- Established calls are still open

- New incoming calls are accepted

- The system is able to close established calls

- You are able to switch configurations (this requires a configuration reload)

- You are able to change the state (Run / Don't Run) of an application

- You are able to enable / disable trunks (this requires a configuration reload)

- You are able to enable / disable stacks (SS7, SIP, ISDN) (this requires a configuration reload)

- You are able to add / remove / modify gateway routes (this requires a configuration reload)

- You are able to add / remove / modify gateway accounts (this requires a configuration reload)
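The post-switchover checklist can be run as a set of named checks. In this Python sketch, `run_post_switchover_checks` is a hypothetical helper and each check function is a placeholder you would implement against your own test environment; a check that raises an exception is counted as a failure.

```python
from typing import Callable


def run_post_switchover_checks(checks: dict[str, Callable[[], bool]]) -> list[str]:
    """Run each named check; return the names of the checks that failed."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:  # a check that errors out counts as a failure
            ok = False
        if not ok:
            failed.append(name)
    return failed
```

For example, the dictionary keys could be the checklist items above ("Established calls are still open", "New incoming calls are accepted", and so on), each mapped to a function that probes the system and returns `True` on success.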

Known limitations

We have identified the following limitations with the enhanced high availability support available in Toolpack v2.3.

- It is not always possible to recover from a double fault. For example, should both configuration databases go offline, any telephony applications that use these databases will cease to function correctly.